In a previous post I introduced the Industrial Internet Consortium (IIC), its reference architecture, and the concepts of trustworthiness used in its security framework. Since that post, the IIC has published a whitepaper, Managing and Assessing Trustworthiness for IIoT in Practice, “to raise awareness in industry of the importance of trustworthiness, context, and assurance, how to measure, analyze and assess it, as well as how to manage and govern it.”

This approach to IIoT security is compelling because trustworthiness is a good concept to encapsulate the many concerns that build confidence in a product. The definition used by the IIC is as follows:

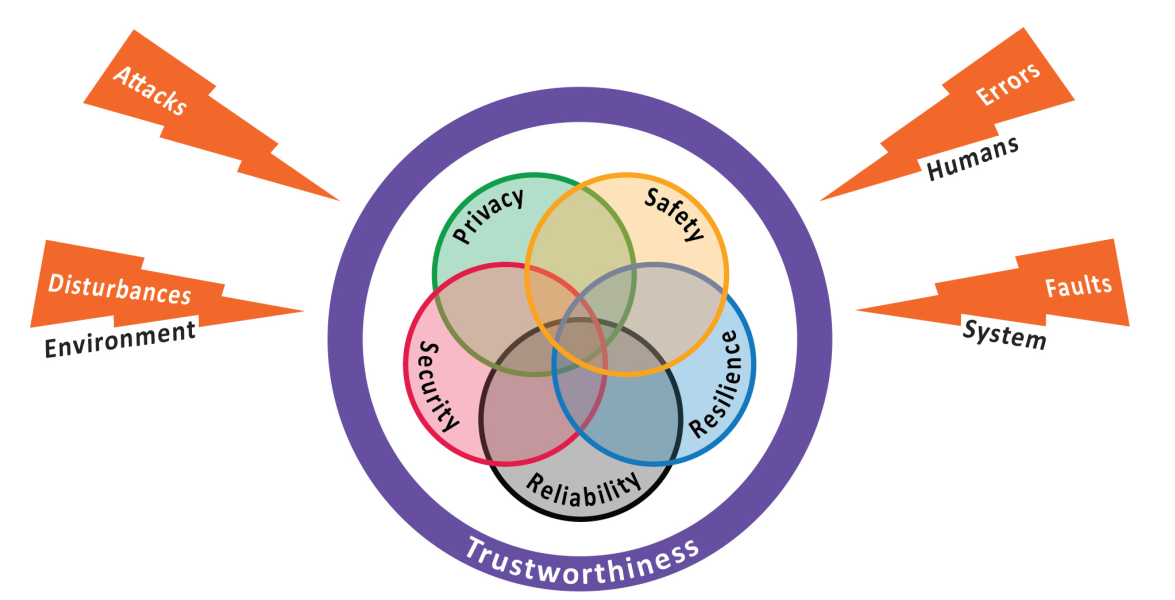

“Trustworthiness is the degree to which the system performs as expected in the face of environmental disturbances, loss of performance quality and accuracy, human errors, system faults and attacks. Assurance of trustworthiness is the degree of confidence one has in this expectation. A system must be assured as being trustworthy for a business or organization to have confidence in it.”

Trustworthiness encapsulates security, safety, privacy, resilience, and reliability into an umbrella term which aids communication when navigating the complicated world of IIoT. Trustworthiness also means that each key element must be considered in context of the others. Striving for security can’t come at the expense of safety or reliability, for example. This diagram in particular does a good job of illustrating the concept:

Source: Managing and Assessing Trustworthiness for IIoT in Practice

The Role of Static Analysis in Assessing Trustworthiness

As I mentioned in the previous post, the IIC Security Framework and the latest whitepaper discussed here do not deal with software development directly. All of the guiding principles are stated at a high level and are meant for system-wide application. The security framework states that “rigorous software development practices can help developers identify and eliminate potential safety issues and security vulnerabilities”. The new IIC whitepaper builds on this by providing best practices for assessing trustworthiness that fit with the approach to improving quality and security that we have been promoting (Managing and Assessing Trustworthiness for IIoT in Practice – Section 4):

- Baselining, which includes the gathering of basic information to input into the process,

- Analysis, during which a management team can assess how trustworthiness-related events can potentially impact a business, and real options for addressing risks,

- Implementation, during which the management team will establish trustworthiness targets and establish appropriate governance and

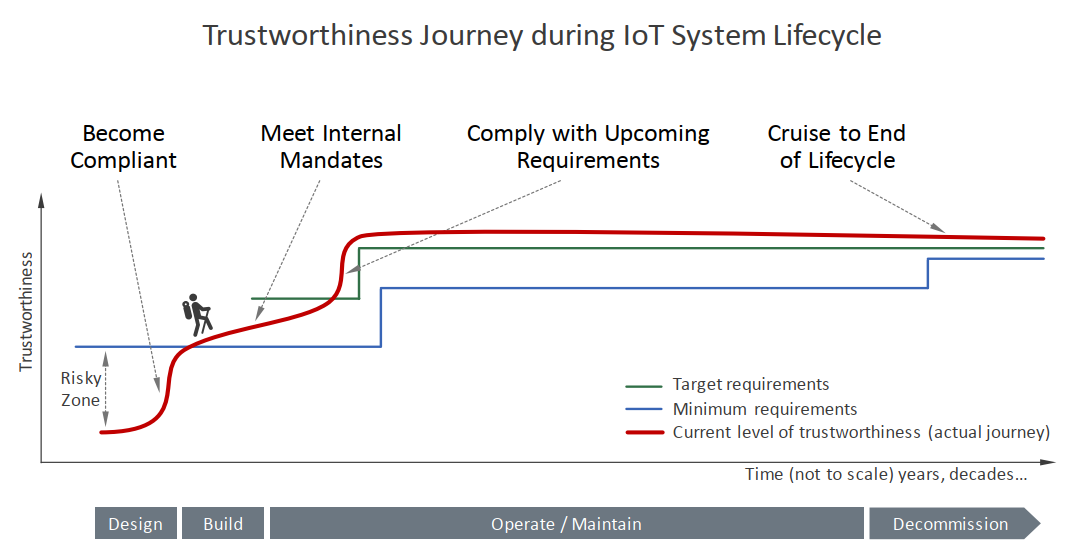

- Iterate and maintain, is key since trustworthiness is not a static concept, and trustworthiness much be managed in the context of an overall changing landscape.

Trustworthiness is built into a product as an iterative process, and again the whitepaper has a good illustration for this:

Source: Managing and Assessing Trustworthiness for IIoT in Practice

So how does this apply to software development, tools and static analysis? Despite not discussing trustworthiness at the software development level, the principles are meant to be applied throughout product development. In the context of the best practices outlined above, let’s evaluate the role of static analysis in “rigorous software development practices.”

- Baselining: The first step in adopting static analysis tools in software development is to assess the current state of the software. We discuss this in more detail in this whitepaper, but establishing a baseline with an agreed-upon set of rules is the recommended starting point. The baseline is just that, a starting point; software teams don’t necessarily have to fix all the issues discovered at this stage. However, it’s critical to start somewhere and record where the software stands in terms of quality and security.

- Analysis: Ongoing analysis of baseline findings and of new vulnerabilities and defects is required. A severity and a priority are assigned to each finding, and the software team decides which issues to deal with based on these criteria. Not all static analysis results are equally critical, and analysis is needed to focus limited resources on the warnings with the most risk and impact.

- Implementation: Remediating discovered bugs and vulnerabilities requires review, testing, analysis and follow-up. A formal approach is required to verify that discovered bugs are fixed properly and that the fixes don’t impact dependent components. Software teams set goals for security and quality that can be measured with the help of static analysis tools. The end customer may also require secure and safe coding standards, and compliance with these must be verified and audited with the aid of static analysis tools.

- Iterate and maintain: Iterating and improving software, tool usage and practices is key to improving product trustworthiness. Software teams can use advanced static analysis tools to evaluate their progress over time and converge on their definition of trustworthiness.
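To make the four steps above a little more concrete, here is a minimal sketch of how a team might track static analysis findings against a baseline. It is not taken from the IIC whitepaper or from any particular tool: the `Finding` record, the rule names, and the 1-to-5 severity scale (1 being most severe) are all assumptions made for illustration.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str      # hypothetical rule identifier reported by a tool
    file: str      # source file the warning was reported in
    severity: int  # assumed scale: 1 = most severe, 5 = least severe


def fingerprint(f: Finding) -> tuple:
    # Identify a finding by rule and file so it can be matched across runs.
    return (f.rule, f.file)


def new_findings(baseline: list, current: list) -> list:
    # Baselining: anything not present in the baseline run is new.
    known = {fingerprint(f) for f in baseline}
    return [f for f in current if fingerprint(f) not in known]


def triage(findings: list, max_severity: int = 2) -> list:
    # Analysis: keep only the most severe findings, worst first.
    urgent = [f for f in findings if f.severity <= max_severity]
    return sorted(urgent, key=lambda f: (f.severity, f.file, f.rule))


def fixed_count(baseline: list, current: list) -> int:
    # Iterate and maintain: count baseline findings that no longer appear.
    still_present = {fingerprint(f) for f in current}
    return sum(1 for f in baseline if fingerprint(f) not in still_present)


# Example data; the rule names and files are made up.
baseline = [Finding("NULL_DEREF", "a.c", 1), Finding("UNUSED_VAR", "b.c", 4)]
current = [
    Finding("UNUSED_VAR", "b.c", 4),
    Finding("BUFFER_OVERRUN", "c.c", 1),
    Finding("STYLE", "c.c", 5),
]

for f in triage(new_findings(baseline, current)):
    print(f"fix first: {f.rule} in {f.file} (severity {f.severity})")
print("baseline findings fixed:", fixed_count(baseline, current))
```

Matching on rule and file alone is a deliberate simplification. Real tools usually track findings with more robust identifiers, so treat this only as an outline of the baseline, triage, and trend steps.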

Summary

Trustworthiness combines the various requirements of IIoT into a convenient umbrella term. The new IIC whitepaper outlines how to assess trustworthiness at a system level, but the same principles apply to software development. Static analysis tools are an important means of assessing the current state of software quality and security, and they provide a method to improve the software over time. They are well suited to the iterative approach to trustworthiness outlined in these new guidelines.