Interview with Barbara Guttman, manager of the Software Quality Group at NIST, which is publishing new guidelines to support the presidential order to secure cyberspace

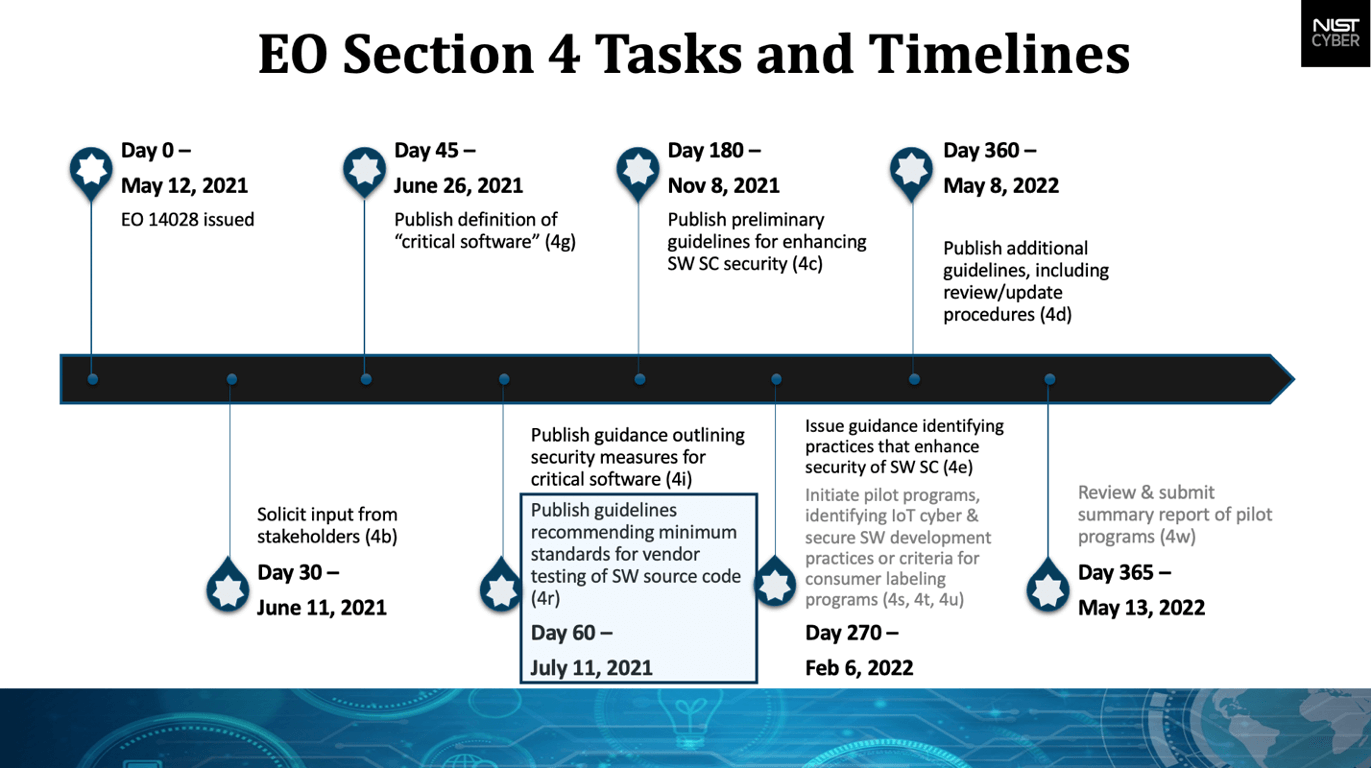

After the President of the United States signed Executive Order 14028 to improve national cybersecurity, NIST (the National Institute of Standards and Technology) took less than 45 days to publish its definitions of critical software in support of the order. Less than 15 days after that, NIST published guidelines for developer verification of software and components, along with minimum security measures for critical software use.

While these guidelines are focused on government systems, they’re set to become rules in 2022, and the impact will be swift and real for vendors of commercial software that fail to meet them.

NIST Guidelines Rolling Out in Support of Presidential Order to Secure Cyberspace

Barbara Guttman, manager of NIST’s Software Quality Group, is still recuperating from spearheading these consensus-based guidelines. In this interview, she took the time to break down the NIST software definition and verification guidelines, and to offer advice for third-party developers.

Q: How does this push for secure commercial software compare to the infamous Orange Book used by the National Security Agency to validate technology acquired by sensitive agencies?

The main differences are that this push comes from a presidential order, and that attestations are handled differently than they were under the Orange Book. The Orange Book focused on having the NSA test software. This presidential order puts the onus of attestation on the vendors. The whole of section 4(e) of the executive order is about having vendors attest that they did good security, while 4(r) is about software verification, with a corresponding item on vulnerability testing.

Q: How do these guidelines impact developers and third-party software vendors?

From a developer point of view, if you’re not familiar with the secure development and testing techniques laid out in our guidelines, you need to get familiar with them. Eventually the federal government will ask, before we buy software, ‘Do you have integrity checking? Do you have provenance? Do you have a Software Bill of Materials [SBOM]? Did you use a secure development methodology?’ This is basic goodness for developing higher quality, more secure code.

The overall intention is, if the government is going to buy a piece of software that is critical, we want to make sure some basic security is conducted during its development.

Q: There’s a lot packed into these guidelines. Can you break the guidelines down for us?

To get started, I recommend following the minimum standards for developers, which cover threat modeling, automated testing, static analysis, dynamic analysis and other basics of DevSecOps. This document covers only the minimum requirements for verification, but those should be applied in the context of a secure development process.

For our February deliverable, NIST is also defining what artifacts to look for when attesting that a given security measure has been carried out. For example, it might ask a vendor to attest that they looked for hardcoded passwords, which is in the minimum standards document.
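To make the hardcoded-password example concrete, here is a minimal sketch of what such a check could look like. It is not taken from the NIST document: the regular expression, the function name `find_hardcoded_secrets`, and the sample input are all assumptions made for illustration, and a real static analysis tool would go much further.

```python
import re

# Assumed heuristic (not from the NIST guidelines): a name containing
# "password", "passwd", "pwd" or "secret" that is assigned a string literal.
SECRET_ASSIGNMENT = re.compile(
    r"""(?i)\b\w*(password|passwd|pwd|secret)\w*\s*[:=]\s*(['"])(?P<value>[^'"]+)\2"""
)


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, stripped_line) pairs that look like hardcoded credentials."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        if SECRET_ASSIGNMENT.search(line):
            findings.append((number, line.strip()))
    return findings


if __name__ == "__main__":
    # Hypothetical source text to scan.
    sample = (
        'user = "admin"\n'
        'db_password = "hunter2"\n'
        'password = os.environ["DB_PASSWORD"]\n'
    )
    for number, line in find_hardcoded_secrets(sample):
        print(f"line {number}: {line}")
```

A check like this only flags literal assignments; a value read from the environment, as on the third sample line, is not reported. The list of findings (or an empty list) is the kind of artifact a vendor could keep as evidence that the check was run.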

Pro Tip: GrammaTech’s latest version of CodeSentry introduces software supply chain security, which creates automatic SBOM attestation, identifies open source components, detects 0-day and N-day vulnerabilities, and builds executive risk reports.

Q: How can software providers meet the attestation requirements set forth in the guidelines?

The Software Bill of Materials from the NTIA is tied into the presidential order. The SBOM is effectively a nested inventory, a list of ingredients that make up software components. It’s important to know what software you included, then record that and keep up with the CVEs [Common Vulnerabilities and Exposures] associated with that third-party and/or open source code.
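The "nested inventory" idea can be sketched as a small tree of components that is walked and compared against a list of known advisories. This is an illustration only: the `Component` class, the `advisories` mapping, and every component name and advisory ID in the usage example are hypothetical, and real SBOMs use standard formats rather than this ad hoc structure.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """One ingredient in the inventory, with its own nested ingredients."""
    name: str
    version: str
    dependencies: list["Component"] = field(default_factory=list)


def flatten(component: Component):
    """Yield the component and every nested dependency, depth first."""
    yield component
    for dependency in component.dependencies:
        yield from flatten(dependency)


def affected_components(root: Component, advisories: dict) -> list[tuple[str, str, str]]:
    """Return (name, version, advisory_id) for every component with a known advisory.

    `advisories` maps (name, version) to a list of advisory IDs.
    """
    hits = []
    for component in flatten(root):
        for advisory_id in advisories.get((component.name, component.version), []):
            hits.append((component.name, component.version, advisory_id))
    return hits


if __name__ == "__main__":
    # Hypothetical product, libraries, and placeholder advisory ID.
    parser = Component("example-parser", "1.2.0")
    web = Component("example-web", "4.0.1", [parser])
    app = Component("example-app", "2.0.0", [web])
    advisories = {("example-parser", "1.2.0"): ["PLACEHOLDER-ADVISORY-1"]}
    print(affected_components(app, advisories))
```

The point of the nesting is that the vulnerable parser is not a direct dependency of the application, yet it still shows up when the whole inventory is walked.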

Binary analysis can help us know what is in software. For example, I run the National Software Reference Library [NSRL]. We take each product and break it down into as granular a piece as we can, and then we publish the hashes of the files we broke it down into. So, the NSRL actually knows what’s in the binaries of the products in the library. If an agency finds a vulnerability in ‘File X,’ the NSRL can tell you how many other software products include that file. We discover all of this through the binaries, not the source, which we don’t have access to.
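The lookup Guttman describes, from a file hash back to the products that contain it, can be sketched as a simple reverse index. This is not the NSRL's actual tooling or data format: the use of SHA-256, the function names, and the product and file names in the example are assumptions chosen for illustration.

```python
import hashlib
from collections import defaultdict


def file_digest(data: bytes) -> str:
    """Hash file contents; SHA-256 is an assumption made for this sketch."""
    return hashlib.sha256(data).hexdigest()


def build_index(products: dict[str, dict[str, bytes]]) -> dict[str, set[str]]:
    """Map each file hash to the set of products that contain that file.

    `products` maps a product name to {file_name: file_contents}.
    """
    index = defaultdict(set)
    for product, files in products.items():
        for contents in files.values():
            index[file_digest(contents)].add(product)
    return index


def products_containing(index: dict[str, set[str]], data: bytes) -> list[str]:
    """Return the sorted names of products that include a file with these contents."""
    return sorted(index.get(file_digest(data), set()))


if __name__ == "__main__":
    # Hypothetical products that happen to ship the same library file.
    shared_library = b"\x7fELF...shared library bytes..."
    products = {
        "Product A": {"libshared.so": shared_library, "a.bin": b"only in A"},
        "Product B": {"vendor/libshared.so": shared_library},
        "Product C": {"c.bin": b"only in C"},
    }
    index = build_index(products)
    print(products_containing(index, shared_library))
```

Because the index is keyed on content hashes rather than file names, the shared file is found in both products even though it sits under different paths, and no source code is needed.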

Q: How can developers get more involved in the NIST guidelines working groups?

We will presumably have more public workshops to get input about smart ways to go about promoting software security. Our first workshop, which we only had two weeks to promote, produced some good ideas. We met some exciting new people, mostly software vendors and developers. We also asked for position papers, and we published them on our site.