Balancing Application Security Testing Results and Resources

This post looks at how you can make SAST (Static Application Security Testing) faster and why it’s important. The performance of SAST tools affects their success as they are introduced into developer workflows, and subsequently influences their total cost of ownership. SAST tools need to be fast enough to not noticeably degrade workflow efficiency, but they must also give actionable results.

The examples given below are from GrammaTech CodeSonar, but the advice herein is generic.

Integrating SAST into DevSecOps Workflow

The focus here is integrating SAST into DevSecOps, the integration of development, security, and operations within the SDLC, particularly in some form of CI/CD (Continuous Integration/Continuous Deployment) process. In these modern, continuous processes, code is run through testing after it is written and before it is committed to the repository. That testing may be unit testing, regression testing, or static application security testing. The goal of this post is to discuss techniques that reduce the impact of SAST on this process. Optimizing the other testing stages certainly goes hand in hand with this. In this context, reducing analysis time means reducing calendar time.

The benefit of SAST, and the reason it fits so well with DevSecOps, is the real-time feedback it gives developers as they create and change source code, before they submit it. The acceptable time in this kind of workflow is 15 to 30 minutes at most, with some allowance for the size of the change. Keeping SAST within that timeframe is important: fast feedback means quicker turnaround and more progress on features. The business value lies in those features, and testing makes sure they work.

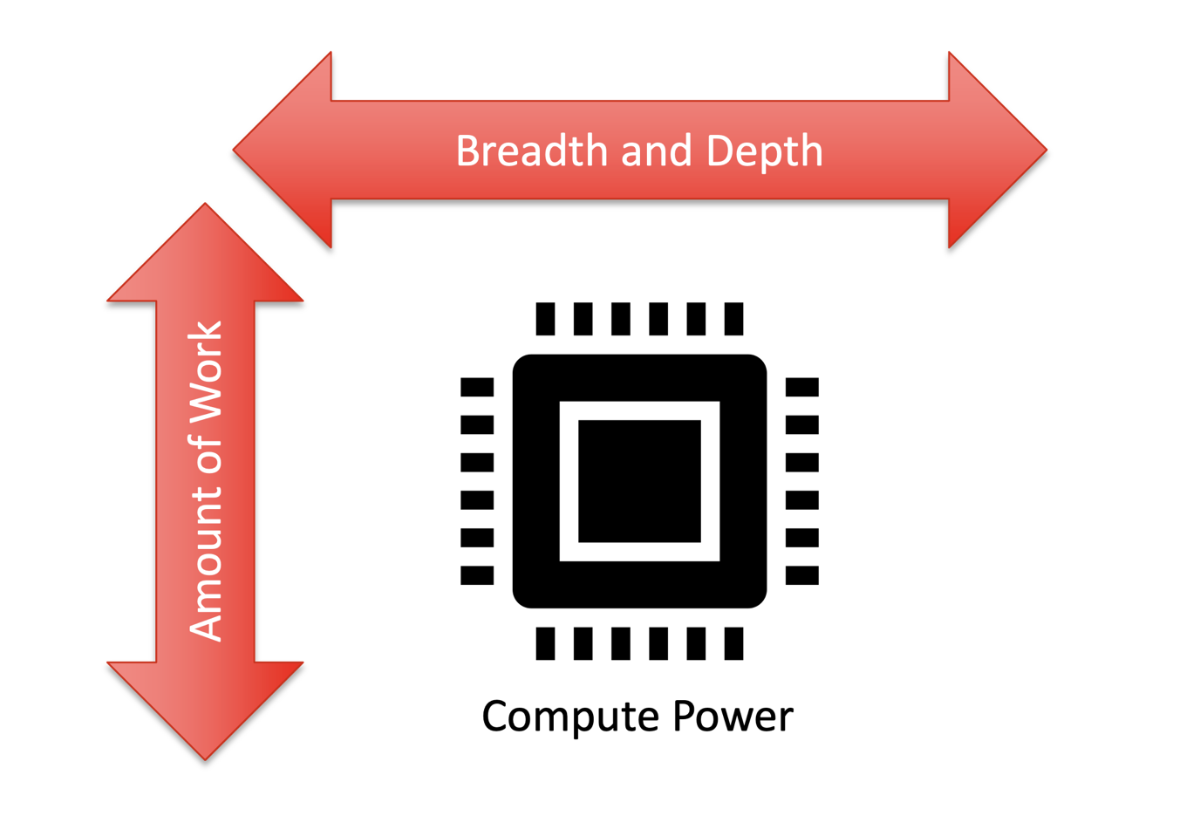

Depth versus Breadth

The checkers used by SAST tools are not created equal, at least in terms of the computing power they need. Analysis time is directly related to the complexity of the checkers used to detect particular types of vulnerabilities. Coding standard enforcement rules, for example, are typically much cheaper to compute than the complex tainted-data analysis used to detect command or SQL injection. Therefore, trade-offs must be made in the types of bugs and vulnerabilities to search for. While developers are coding, it makes sense to minimize SAST compute time to reduce delays. During software builds, more time and compute power are available, so depth and breadth can be increased to catch complex vulnerabilities.
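A minimal Python sketch of how checker selection might differ between the developer stage and the build stage. It assumes a hypothetical checker catalogue with made-up relative costs; the checker names, categories, and cost units are illustrative only and are not CodeSonar's configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Checker:
    name: str
    category: str       # "coding-standard", "dataflow", or "taint" (hypothetical labels)
    relative_cost: int  # hypothetical cost units, not measured values


# Hypothetical catalogue; real tools name and configure checkers differently.
CHECKERS = [
    Checker("naming-convention", "coding-standard", 1),
    Checker("banned-function", "coding-standard", 1),
    Checker("null-dereference", "dataflow", 5),
    Checker("sql-injection", "taint", 20),
    Checker("command-injection", "taint", 20),
]


def select_checkers(stage, budget):
    """Pick checkers allowed for a workflow stage, cheapest first, within a cost budget."""
    if stage == "developer":
        allowed = {"coding-standard"}  # cheap, local rules while coding
    elif stage == "build":
        allowed = {"coding-standard", "dataflow", "taint"}  # deeper analysis in builds
    else:
        raise ValueError(f"unknown stage: {stage}")
    chosen, spent = [], 0
    # sorted() is stable, so equal-cost checkers keep their catalogue order.
    for checker in sorted(CHECKERS, key=lambda c: c.relative_cost):
        if checker.category in allowed and spent + checker.relative_cost <= budget:
            chosen.append(checker.name)
            spent += checker.relative_cost
    return chosen


print(select_checkers("developer", 10))  # ['naming-convention', 'banned-function']
```

Ordering cheapest first is one simple policy; a team might instead rank checkers by the severity of the defects they find and fill the budget that way.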

Compute Power

SAST tool analysis time scales well with compute power: more computing resources, such as CPU cores and memory, can shorten analysis time. Bigger is better in this case, and investing in additional hardware for SAST can pay off in productivity.

Codebase Size

The SAST tool’s analysis time also depends on the amount of source code analyzed. Together with your application’s requirements, the size of your code base is a factor in determining the optimal depth and breadth of analysis.

Small code bases often go together with strict requirements for SAST, as in safety-critical software. It’s expensive to build and test safety-critical code, yet these products must conform to industrial coding standards and rigorous levels of testing and analysis. SAST tools are required in these cases, and deep analysis is needed to fully cover possible bugs and vulnerabilities. Moreover, a wide breadth of analysis is needed, not only for coding standard enforcement but also to find a wide variety of defects.

Larger and extremely large code bases require a trade-off in terms of depth and breadth of analysis. Prioritizing the types of defects and vulnerabilities to concentrate on is critical.

There are various controls that affect SAST analysis time. These include breadth and depth of analysis and the available compute power to perform the analysis.

One way to look at the choices for reducing overall analysis time is in terms of three levers: two, depth and breadth, that control the amount of work, and a third, the amount of compute power. In this post, we assume computing resources are fixed and focus on reducing the amount of work. How can the SAST tool be made to do less work and return results sooner?

Steps to Speeding up SAST

Speeding up SAST means reducing the amount of work. The most intensive operation is a full analysis, meaning an analysis of the entire code base. Just as a full compilation from scratch takes a long time, so does a full SAST analysis. It represents the maximum analysis time to expect from your SAST tools.

Reducing this number requires other approaches, and they follow the same techniques used to avoid full recompilation in large C/C++ projects. The first is incremental analysis. Next, with well-defined, loosely coupled, component-based architectures, component analysis becomes possible. The final step to speed up SAST is developer analysis.

Incremental Analysis

Incremental builds are a major factor in reducing developer build times for C and C++ projects. Small changes don’t require the entire code base to be recompiled; doing so would be impractical and unnecessary. When a developer modifies something, the build infrastructure automatically recompiles only what the change affects.
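A minimal sketch of that idea in Python, assuming content hashes are used to detect changes and that each translation unit lists only the headers it includes directly. Real build tools track dependencies for you (make, for instance, compares file timestamps rather than hashes), so this only illustrates the decision being made.

```python
import hashlib


def digest(text):
    """Content hash used to detect whether a file changed since the last build."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def units_to_rebuild(sources, includes, previous_digests):
    """Return translation units whose own source or any directly included header changed.

    sources: file name -> current contents (both .c files and headers)
    includes: translation unit -> headers it includes directly (simplification)
    previous_digests: file name -> digest recorded at the last build
    """
    changed = {name for name, text in sources.items()
               if previous_digests.get(name) != digest(text)}
    stale = [unit for unit, headers in includes.items()
             if unit in changed or any(h in changed for h in headers)]
    return sorted(stale)


old = {"a.c": "int a;", "b.c": "int b;", "util.h": "#define X 1"}
recorded = {name: digest(text) for name, text in old.items()}
deps = {"a.c": ["util.h"], "b.c": []}
print(units_to_rebuild({**old, "util.h": "#define X 2"}, deps, recorded))  # ['a.c']
```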

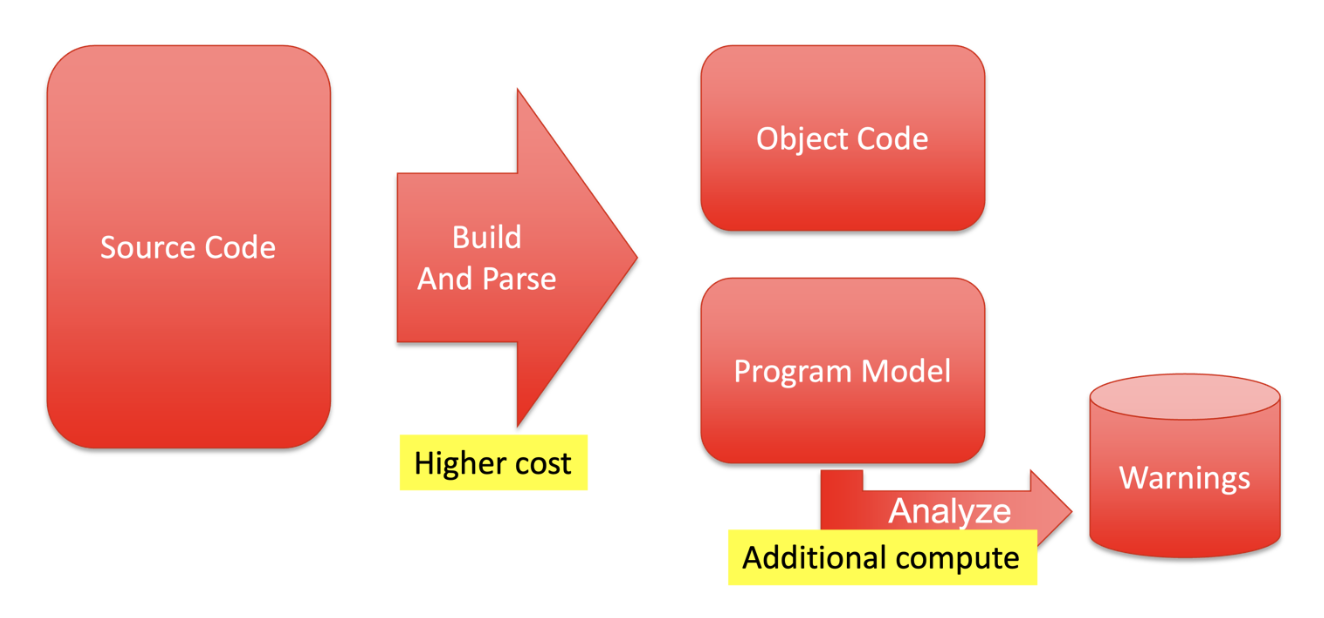

SAST tools work in a similar manner. As source code is being compiled, the SAST tool parses the same source code and creates a program model. The next phase is analyzing that program model against the configured checkers and generating warnings. The warnings typically go into a database for later analysis and can be forwarded to additional development tools for review.

SAST tools perform analysis much like a compiler but need to perform additional analysis on the program model created during the build.

The SAST tool runs in parallel with the software build. Although it operates like a compiler, a SAST tool must do more work than the compiler and hence takes longer to finish and provide results. The amount of code that needs to be recompiled for each change directly affects analysis time.
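A toy illustration of that pipeline (build a program model, run the configured checkers, store the warnings). It assumes a drastically simplified program model in which each function maps to its file and the functions it calls, plus a hypothetical banned-call checker. An in-memory SQLite table stands in for the tool’s warning database; this is not how CodeSonar stores its model or results.

```python
import sqlite3


def check_banned_calls(model):
    """Hypothetical checker: flag calls to functions on a banned list."""
    banned = {"gets", "strcpy"}
    for func, (path, callees) in model.items():
        for callee in callees:
            if callee in banned:
                yield (path, func, "banned-call", f"{func} calls {callee}")


def run_analysis(model, checkers, db):
    """Run each checker over the program model and store its warnings in the database."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS warnings "
        "(file TEXT, function TEXT, checker TEXT, message TEXT)"
    )
    for checker in checkers:
        # executemany accepts any iterable of parameter tuples, including a generator.
        db.executemany("INSERT INTO warnings VALUES (?, ?, ?, ?)", checker(model))
    db.commit()
    return db.execute("SELECT COUNT(*) FROM warnings").fetchone()[0]


# Toy program model: function name -> (file it lives in, functions it calls).
model = {
    "main": ("main.c", ["parse_input", "printf"]),
    "parse_input": ("io.c", ["gets", "strlen"]),
    "copy_name": ("util.c", ["strcpy"]),
}
db = sqlite3.connect(":memory:")
print(run_analysis(model, [check_banned_calls], db))  # 2
```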

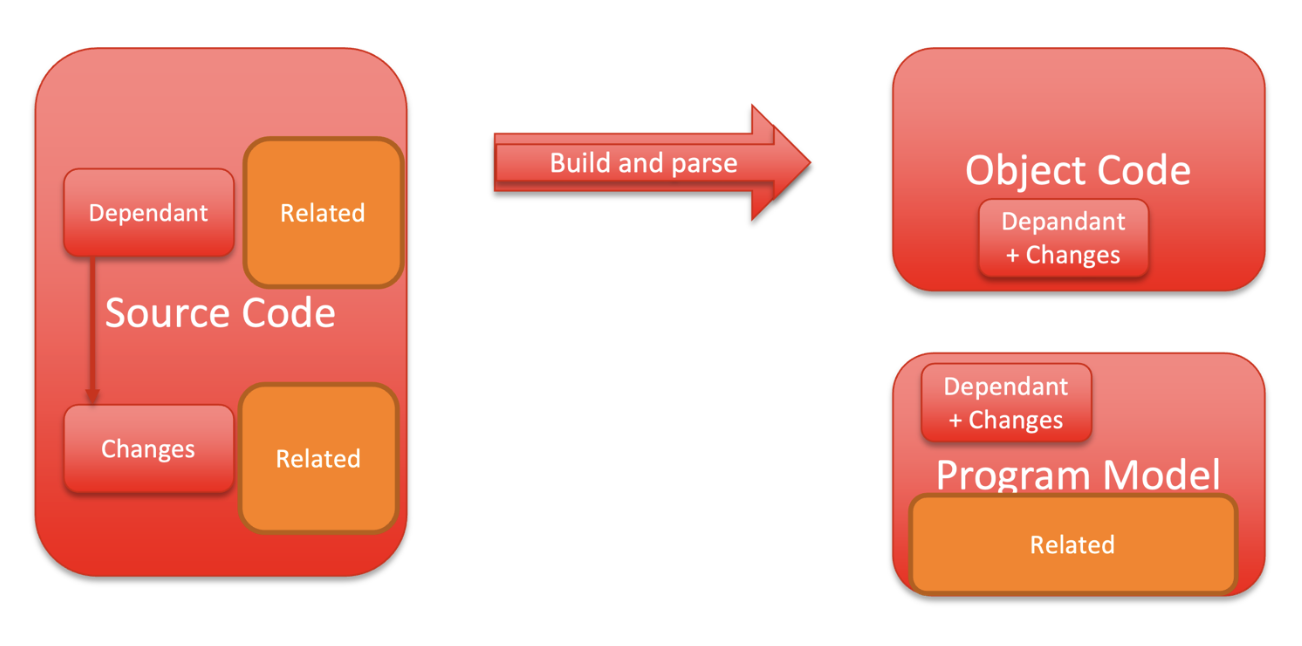

Analyzing an incremental build takes a lot more work than analyzing just the changes. SAST tools analyze code by abstractly executing code flow across multiple compilation units, so even when only a single file is modified, the calls it makes into other files must be analyzed as well. Incremental analysis doesn’t increase the build and parse workload, but the analysis scope will be much bigger than the change itself suggests. Whenever you do an incremental build, a SAST tool needs to reanalyze the changed units, their dependencies, and related code around them.

Incremental analysis performs analysis only on changed units and related dependencies.

So incremental analysis is a great way to reduce the amount of work, but because its scope extends beyond the changed files, it can take longer than the size of a change suggests. It remains a good option for reducing SAST analysis times, and an easy one to adopt, since the capability is built into SAST tools like CodeSonar.
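A sketch of why the scope grows, assuming a file-level call graph and the rule that a change forces reanalysis of the changed files, everything they call transitively, and their direct callers. Which neighbors a real tool revisits depends on its analysis; this rule and the file names are assumptions for illustration.

```python
from collections import deque


def incremental_scope(calls, changed):
    """Approximate the files an incremental analysis must revisit.

    calls: file -> set of files it calls into (file-level call graph)
    changed: files modified since the last analysis
    Assumed rule: changed files + transitive callees + direct callers.
    """
    changed = set(changed)
    scope = set(changed)
    queue = deque(changed)
    while queue:  # breadth-first walk over callees
        current = queue.popleft()
        for callee in calls.get(current, ()):
            if callee not in scope:
                scope.add(callee)
                queue.append(callee)
    callers = {f for f, callees in calls.items() if set(callees) & changed}
    return scope | callers


calls = {
    "main.c": {"parser.c", "log.c"},
    "parser.c": {"lexer.c", "log.c"},
    "lexer.c": {"log.c"},
    "log.c": set(),
    "report.c": {"log.c"},
}
print(sorted(incremental_scope(calls, {"parser.c"})))
# ['lexer.c', 'log.c', 'main.c', 'parser.c']
```

Changing the widely used `log.c` in this example pulls every file into scope, which is why a small edit can still trigger a large incremental analysis.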

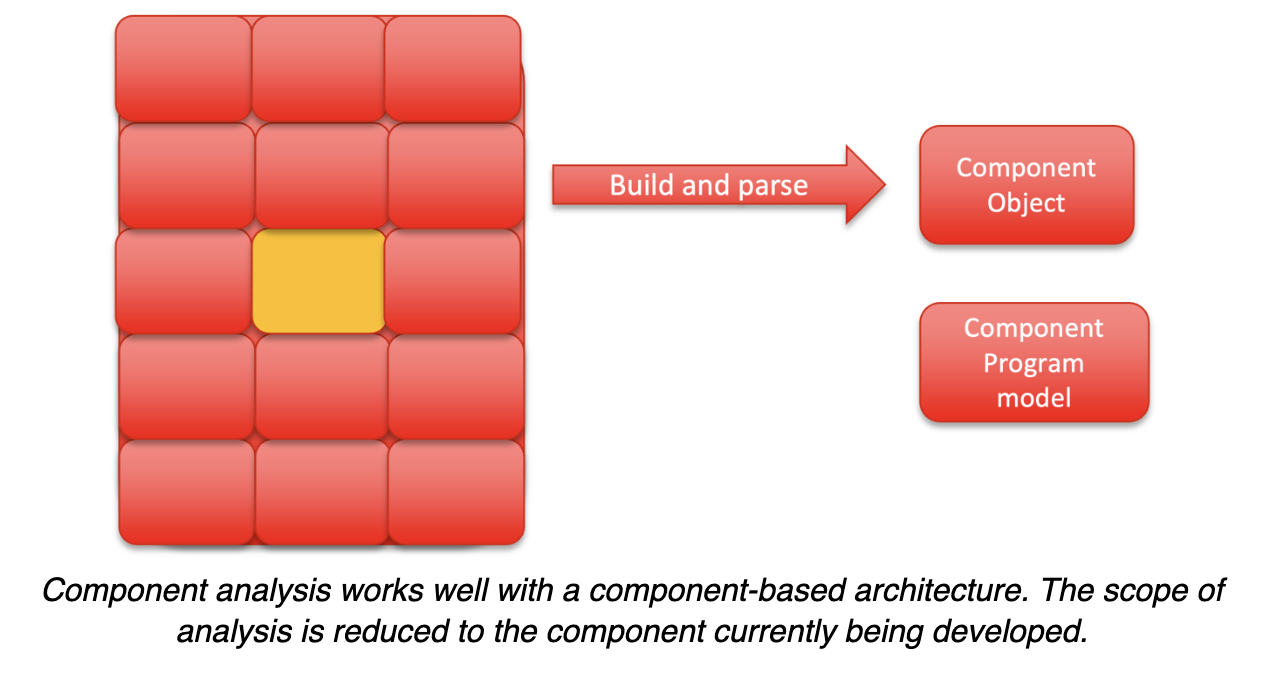

Component Analysis

Component analysis depends on a component-based architecture. If your software has a loosely coupled, component-based architecture with well-defined interfaces, it’s possible to isolate both the testing and the SAST analysis to the component where changes are made. Just as this type of architecture simplifies many aspects of development, it pays off with SAST as well. It’s possible to analyze the component in isolation and get good results.
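A minimal sketch of choosing which components to analyze, assuming each component lives under its own, non-overlapping source root. The component names and paths are hypothetical. Changed files that no component owns are returned separately, since they may call for a wider analysis.

```python
from pathlib import PurePosixPath


def components_to_analyze(changed_files, component_roots):
    """Return (components owning the changed files, changed files owned by none).

    component_roots: component name -> source root directory.
    Roots are assumed not to overlap, so each file has at most one owner.
    """
    roots = {name: PurePosixPath(root) for name, root in component_roots.items()}
    selected, unowned = set(), set()
    for changed in changed_files:
        parents = PurePosixPath(changed).parents  # every ancestor directory
        owners = [name for name, root in roots.items() if root in parents]
        if owners:
            selected.update(owners)
        else:
            unowned.add(changed)
    return selected, unowned


roots = {"net": "src/net", "storage": "src/storage", "ui": "src/ui"}
print(components_to_analyze(["src/net/tcp.c", "tools/gen.py"], roots))
# ({'net'}, {'tools/gen.py'})
```

Matching on path components through `PurePosixPath.parents`, rather than on string prefixes, keeps a file such as `src/network/x.c` from being attributed to a component rooted at `src/net`.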

Developer Analysis

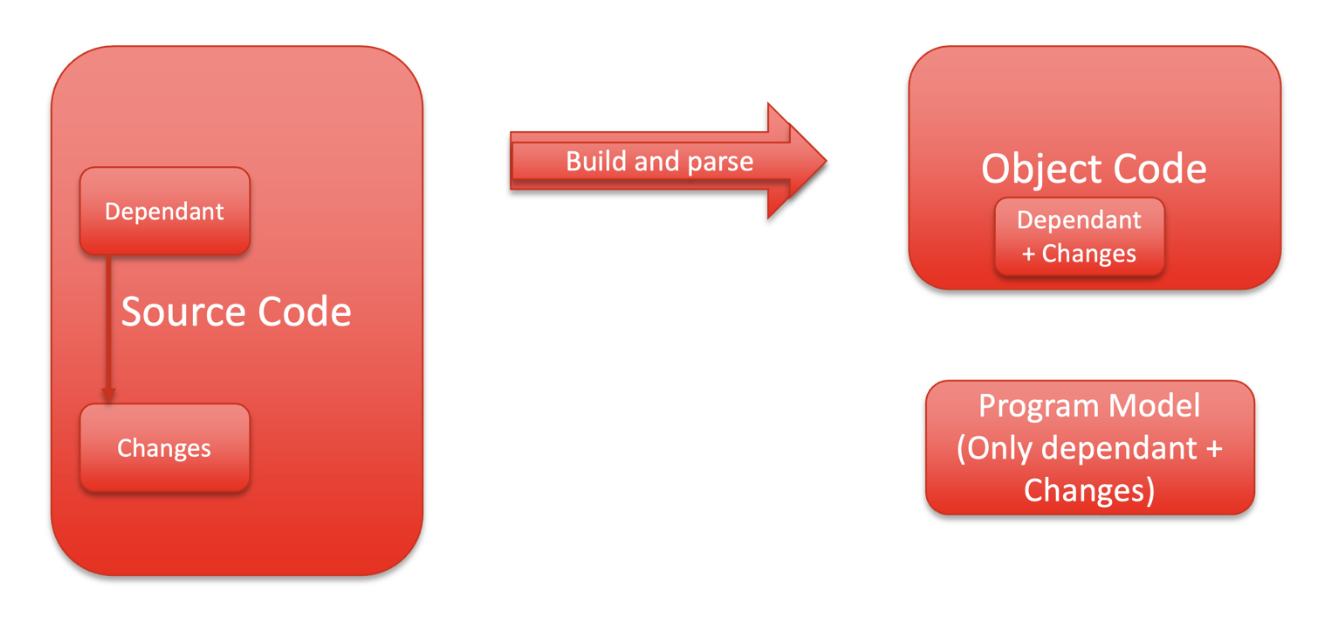

There is still an opportunity to further reduce the analysis workload, even if you are developing software with a well-defined, component-based architecture. The idea is to run the initial build without SAST, which removes the burden of the extra computation. Presumably, this build will be available quickly.

Unlike incremental analysis, the first full build is done without SAST and only subsequent builds are analyzed, so the program model is much smaller and builds complete much faster.

However, false negatives increase because of the smaller scope of analysis: only files that changed are analyzed, so any dependencies of those files lie outside the program model and are not considered in the analysis. Even so, this rapid analysis works well for checks such as coding standard enforcement.

Developer analysis is local to the developer’s current scope of work and usually done within their development environments.
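To illustrate where the false negatives come from, here is a toy sketch with a hypothetical null-dereference check that can only reason about functions inside the program model. The function facts are made up: `render` in `view.c` dereferences the result of `lookup` in `table.c`, which may return null. When a developer changes only `view.c`, `table.c` is outside the model and the defect goes unreported.

```python
# Hypothetical per-function facts a tool might record in its program model.
FUNCTIONS = {
    "lookup": {"file": "table.c", "may_return_null": True, "derefs_result_of": []},
    "render": {"file": "view.c", "may_return_null": False, "derefs_result_of": ["lookup"]},
    "trim": {"file": "view.c", "may_return_null": False, "derefs_result_of": []},
}


def null_deref_warnings(model_files):
    """Report unchecked dereferences, using only functions inside the program model."""
    model = {name: info for name, info in FUNCTIONS.items() if info["file"] in model_files}
    warnings = []
    for name, info in model.items():
        for callee in info["derefs_result_of"]:
            # A callee outside the model is unknown, so nothing can be concluded.
            if callee in model and model[callee]["may_return_null"]:
                warnings.append(f"{name}: dereferences possibly-null result of {callee}")
    return warnings


print(null_deref_warnings({"view.c"}))             # []  (developer analysis)
print(null_deref_warnings({"view.c", "table.c"}))  # warning reported
```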

Where to Start?

So far, this post has introduced the concepts for reducing SAST analysis workload. The next question is where to start. First and foremost, start with a full build and analysis. This establishes both a baseline of detected bugs and vulnerabilities and a performance target for reducing analysis time. This step determines exactly how many lines of code you have, how long the analysis takes, and its impact on build server load. These metrics should drive the analysis optimization effort.

Given these options, the first attempt should be component-based analysis. If this works well, analysis can be reduced further with incremental and developer analysis within components. This approach integrates into typical developer workflows.
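One way to use the baseline, sketched under the assumption that you record wall-clock time and warning count for each run: compare a scoped run (component, incremental, or developer) against the full analysis, and check it against the 30-minute upper bound given earlier. The `AnalysisRun` type and the numbers in the usage are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnalysisRun:
    label: str
    minutes: float  # wall-clock analysis time
    warnings: int   # number of warnings reported


def compare_to_baseline(baseline, run, budget_minutes=30.0):
    """Compare a scoped analysis run against the full-analysis baseline."""
    return {
        "time_saved_pct": round(100 * (1 - run.minutes / baseline.minutes), 1),
        "warnings_retained_pct": round(100 * run.warnings / baseline.warnings, 1),
        "within_budget": run.minutes <= budget_minutes,
    }


full = AnalysisRun("full", minutes=120.0, warnings=400)       # hypothetical baseline
component = AnalysisRun("component", minutes=24.0, warnings=300)
print(compare_to_baseline(full, component))
# {'time_saved_pct': 80.0, 'warnings_retained_pct': 75.0, 'within_budget': True}
```

Comparing warning counts is only a rough proxy; matching the individual warnings between runs would show exactly which defects a narrower scope misses.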

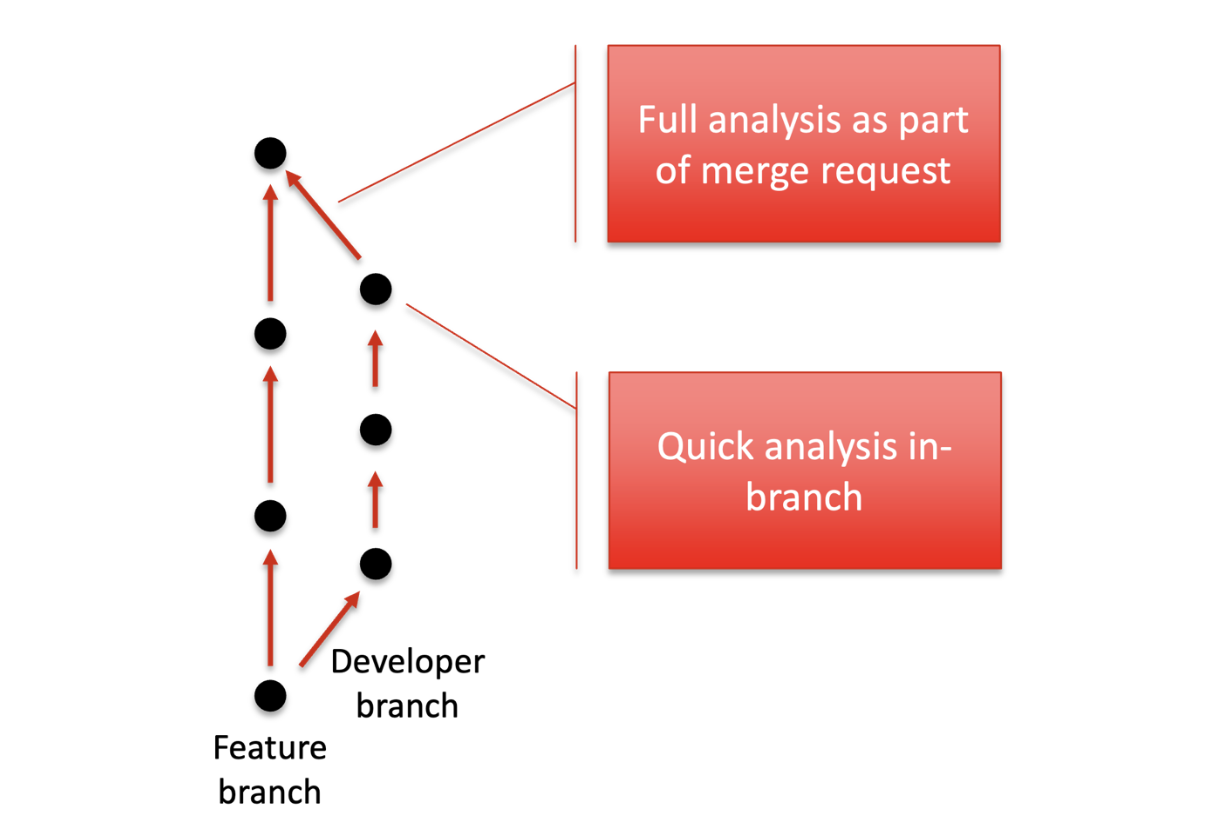

When a developer works on a branch to implement changes, quick local developer analysis catches local errors. These tend to be the largest set, since they represent the largest part of the code changes. When coding standards are enforced, this is the point where violations are caught and displayed. A full analysis is recommended on a merge request to catch the remaining defects related to behavior across component boundaries. In the case of CodeSonar, all warning states and annotations are kept across different scopes of analysis.

Developer analysis works well within a developer branch. When merging, a full analysis is recommended.
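The branch-versus-merge workflow can be sketched as a simple scope decision. The event names `branch_commit` and `merge_request` are hypothetical placeholders for whatever triggers your CI system provides.

```python
def files_to_analyze(event, changed_files, all_files):
    """Choose the analysis scope for a CI event (event names are hypothetical)."""
    if event == "branch_commit":
        # Developer analysis: only the files changed on the branch.
        return sorted(changed_files)
    if event == "merge_request":
        # Full analysis: the whole code base, to catch cross-component defects.
        return sorted(all_files)
    raise ValueError(f"unrecognized event: {event}")


files = ["a.c", "b.c", "c.c"]
print(files_to_analyze("branch_commit", {"b.c"}, files))  # ['b.c']
print(files_to_analyze("merge_request", {"b.c"}, files))  # ['a.c', 'b.c', 'c.c']
```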

Summary

Speeding up SAST comes down to either reducing the amount of work the SAST tool must do or increasing the compute power available so the analysis finishes sooner. Assuming computing resources stay fixed, there are several approaches that reduce the SAST analysis scope, and with it the time to complete, while still producing excellent results.

A complete analysis of all the sources yields the best and most precise results and is still recommended at certain points during development. However, component analysis works well for software that follows a well-defined component architecture. Combining incremental or developer (local) analysis with this approach provides a good compromise between precision and analysis time.

Read at DevOps.com