The minute I heard about Heartbleed — the bug in OpenSSL responsible for the worst security vulnerability in years — I downloaded the source code and ran CodeSonar to see if it would find the defect. Unfortunately it didn’t. A little digging into the code confirmed my suspicion that the paths through the code to the offending statements were long and involved access to data through many levels of indirection. It didn’t help that there were lots of function-pointer calls in there too. The complexity of the code appeared to defy precise analysis.

I wanted to understand exactly why, but the Heartbleed story was still developing and we were busy finalizing CodeSonar 4, so I put it aside for a few days. (A good description of why static analysis tools had trouble with Heartbleed was written by James Kupsch and Bart Miller and can be found here.)

The Hunt for Heartbleed

A couple of days later, John Regehr had an article on his blog about it. He had been taking his students through the Coverity scan of OpenSSL and noted that it had not been found by that tool either. However, Andy Chou of Coverity then followed up with a discussion that showed how their tool could use a heuristic to detect Heartbleed. It looked for byte-swapping operations as indicators that the values being manipulated came from a network connection, and then tagged those values as being tainted. With that knowledge, their tool reported a tainted buffer access at the offending call to memcpy().

I wish I had thought of this heuristic myself, because it is a very clever illustration of how educating a static analysis tool with a little domain knowledge can yield big rewards. We were quickly able to confirm that CodeSonar could use the same heuristic to find the bug, but it bothered me a bit because it felt like a fragile approach to the problem. To be robust and applicable to other code bases, the tool would need to be able to reliably identify byte-swapping statements in general.

The macro that’s used to do the byte swapping in OpenSSL is not a standard one, so matching on the name of the macro will work fine for that program, but not for others. The alternative is to match the statements to which the macro expands. There are probably a dozen or more plausible ways to do a byte swap but for this to be effective, the static analysis tool must either know about all of them in advance, or have some other way to conclude that a byte swap is going on. And what if the code was compiled for a big-endian platform where the byte swap is a no-op? Or if the serialized form was in some other form that did not need a byte swap? There had to be a better way.

Finding Heartbleed the “Right” Way

We had been in the process of implementing a new warning class in CodeSonar, Tainted Buffer Access, which, in principle, includes Heartbleed. This checker is designed to detect such bugs the “right” way, that is by finding where the taint sources are and by following the taint through the code until it makes it to the point where the bug triggers. With Heartbleed looming large, I asked team members Drew DeHaas and David Vitek to see if our unfinished prototype implementation could detect it.

OpenSSL is not an especially large program but it is fairly complicated, so to keep things manageable we focused on analyzing a subset of the code that exhibited the problem. After a few days of investigation, we found the following:

- We confirmed that the analysis was resolving the indirect function calls correctly, and that taint information was being propagated through those calls. This turns out to be a critical factor because the calls to malloc() (where the size of the buffer is determined) and read() (where the tainted data originates) are both through function pointers.

- Taint propagation can be tricky. There are lots of obvious ways that taint can propagate, but we had missed a few subtleties.

- We have many tunable parameters that allow users to trade analysis performance against thoroughness. These defaulted to settings biased for better performance, so the analysis was not exploring all it needed to.

These were fixable problems though, so we went back to work.

And yesterday, Drew sent me an email with the following subject line:

Subject: Heartbleed detected by CodeSonar

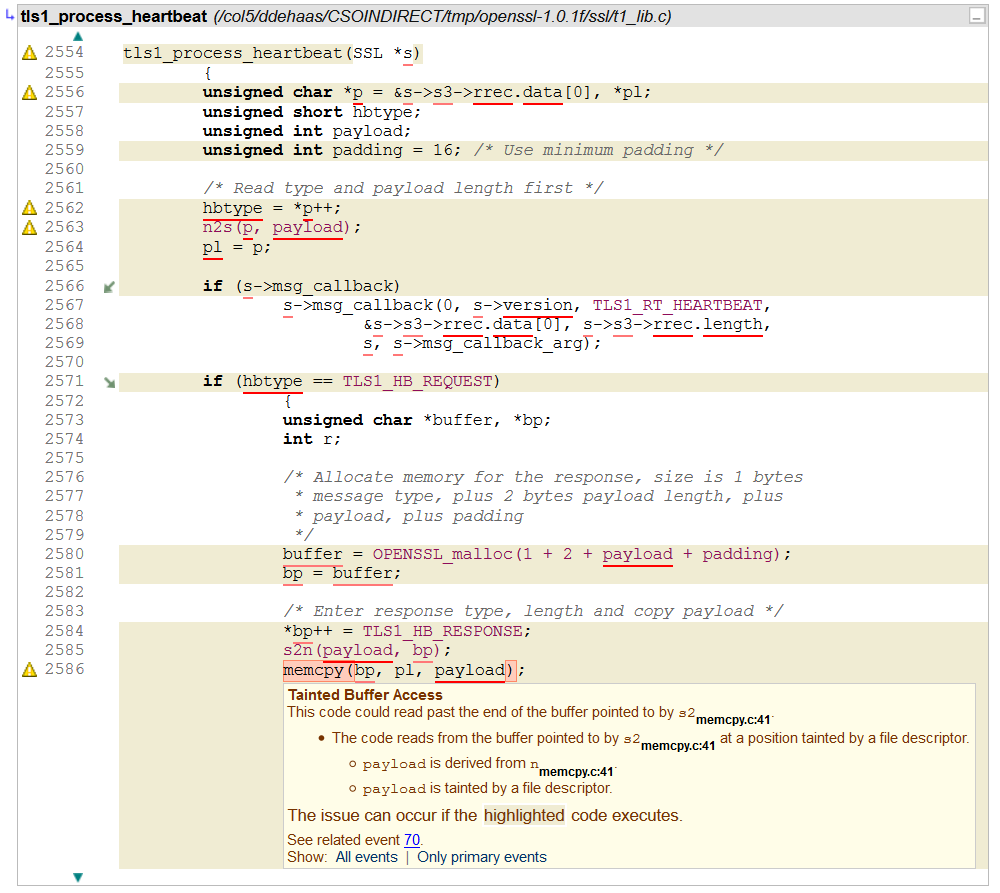

Sure enough, CodeSonar was reporting a Tainted Buffer Access at the call to memcpy(). Here’s a screenshot that shows the last part of that path:

This isn’t presented perfectly; the explanation refers to the formal parameters to memcpy() instead of the actual parameters, but that is reasonably easy for us to clean up. Note the red underlining though — this indicates that the variable is tainted. We track different kinds of taint and this color indicates file descriptor taint. This can be traced back where the taint originated at the call to read().

What have we learned?

I should also stress that this work is still in an experimental stage. The screenshot above is from the analysis of the subset of OpenSSL, and while we have detected it in the full version too, we are still working to find the right combination of techniques and parameters that will find it reliably, with reasonable performance and precision, and that will produce a report that users can understand relatively easily.

Despite the fact that a lot of work on this remains to be done, it is clear that the bug is being detected for the right reasons. The key point is that the techniques we used to implement this new checker were developed and were approaching usability before Heartbleed was discovered, which is a testament to their generality.

Challenge problems like these are good for the industry. While some might complain that we are shutting the barn door after the horse has already bolted, the truth is that there are almost certainly plenty of horses left in the barn. It is unlikely that Heartbleed is the last serious security bug in existence. Having very concrete examples of bugs that really matter is what spurs tool vendors like us to continuously improve the technology. These improvements should help us all avoid the next big security vulnerability.