During the International Working Conference on Source Code Analysis & Manipulation (SCAM), a GrammaTech research publication was awarded the Institute of Electrical and Electronics Engineers (IEEE) Computer Society TCSE (Technical Council on Software Engineering) Distinguished Paper Award.

The paper, “Automated Customized Bug-Benchmark Generation,” describes Bug-Injector, a system that automatically creates benchmarks for customized evaluation of static analysis tools. The work was motivated by the need for better ways to measure how well static analysis tools perform. Tools are usually evaluated against established benchmarks, but these have proven incomplete for evaluating modern, sophisticated tools.

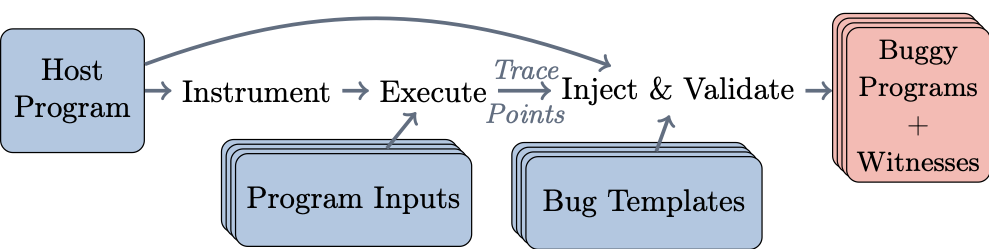

Bug-Injector operates in a pipeline with three stages: instrument, execute, and inject & validate, as shown below.

Bug-Injector takes three inputs: (1) a host program in source format, (2) a set of tests for this program, and (3) a set of bug templates. It attempts to inject bugs from the templates into the host program and returns multiple buggy variants of it. Each variant contains at least one known bug (the one that was injected) and is associated with a witness: a test input that is known to exercise the injected bug.
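To make the inputs and outputs described above more concrete, here is a toy Python sketch of the three stages. It is an illustration under strong assumptions, not Bug-Injector's implementation or API: the host program is a single Python function named `host`, "instrumentation" is approximated with Python's `sys.settrace`, a bug template is a function that inspects the recorded variable values, and validation simply re-runs the variant on the witness and checks for an `IndexError`. Every name in the block (`BugTemplate`, `BuggyVariant`, `execute`, `inject`, `validate`, `bug_injector`) is hypothetical.

```python
import sys
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple

# Toy model only: every name below is hypothetical, not Bug-Injector's API.
State = Dict[str, Any]


@dataclass(frozen=True)
class BugTemplate:
    name: str
    # Returns the buggy statement to insert if the observed state allows it,
    # otherwise None.
    instantiate: Callable[[State], Optional[str]]


@dataclass(frozen=True)
class BuggyVariant:
    source: str    # host program with one bug inserted
    template: str  # name of the template that produced the bug
    line: int      # 1-based line of the inserted statement
    witness: Any   # test input known to exercise the bug


def load(source: str) -> Callable[[Any], Any]:
    """Compile the host source and return its entry point (assumed: `host`)."""
    namespace: Dict[str, Any] = {}
    exec(compile(source, "<host>", "exec"), namespace)
    return namespace["host"]


def execute(source: str, test_input: Any) -> List[Tuple[int, State]]:
    """Instrument + execute: record the local variables seen before each line."""
    trace: List[Tuple[int, State]] = []

    def tracer(frame, event, arg):
        if frame.f_code.co_filename != "<host>":
            return None  # ignore frames outside the host program
        if event == "line":
            trace.append((frame.f_lineno, dict(frame.f_locals)))
        return tracer

    host = load(source)
    sys.settrace(tracer)
    try:
        host(test_input)
    finally:
        sys.settrace(None)
    return trace


def inject(source: str, lineno: int, statement: str) -> str:
    """Insert `statement` just before line `lineno`, matching its indentation."""
    lines = source.splitlines()
    target = lines[lineno - 1]
    indent = target[: len(target) - len(target.lstrip())]
    lines.insert(lineno - 1, indent + statement)
    return "\n".join(lines) + "\n"


def validate(variant_source: str, witness: Any) -> bool:
    """Re-run the variant on the witness; in this toy the bug is an IndexError."""
    try:
        load(variant_source)(witness)
    except IndexError:
        return True
    return False


def bug_injector(source: str, tests: List[Any],
                 templates: List[BugTemplate]) -> List[BuggyVariant]:
    variants: List[BuggyVariant] = []
    for test_input in tests:
        for lineno, state in execute(source, test_input):
            for template in templates:
                statement = template.instantiate(state)
                if statement is None:
                    continue
                candidate = inject(source, lineno, statement)
                if validate(candidate, test_input):  # keep only confirmed bugs
                    variants.append(
                        BuggyVariant(candidate, template.name, lineno, test_input))
    return variants


def out_of_bounds_read(state: State) -> Optional[str]:
    """Template: read a list at an index observed to be past its end."""
    for list_name, seq in state.items():
        if not isinstance(seq, list):
            continue
        for index_name, value in state.items():
            if isinstance(value, int) and value >= len(seq):
                return f"_ = {list_name}[{index_name}]"
    return None


HOST = '''def host(n):
    buf = [0] * 4
    i = n % 4
    return buf[i]
'''
OOB_READ = BugTemplate("out-of-bounds read", out_of_bounds_read)

variants = bug_injector(HOST, tests=[2, 5], templates=[OOB_READ])
for v in variants:
    print(f"{v.template} at line {v.line}, witness input {v.witness}")
```

In this toy, only the test input `5` can act as a witness, because the input `2` never produces an integer at or beyond the buffer length. The sketch keeps the property the paragraph above describes: each returned variant carries the input that is known to reach its injected bug.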

Injected bugs are used as test cases to build a benchmark for evaluating static analysis tools. In the benchmark, Bug-Injector pairs every injected bug with the program input that exercises that bug. Our team identified a broad range of requirements for bug benchmarks; with Bug-Injector, we generated on-demand test benchmarks to meet these requirements. Bug-Injector also allowed us to create customized benchmarks suitable for evaluating tools for a specific use case (e.g., a given codebase and class of bugs). Our experimental evaluation demonstrates the suitability of our generated benchmarks for evaluating static bug-detection tools, and for comparing the performance of different tools.
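As a rough illustration of how such a benchmark could be used to compare tools, the Python sketch below scores each tool by the fraction of injected bugs it reports. This is an assumption about one simple scoring scheme, not the metric used in the paper; the record layout (`InjectedBug`, `Report`), the `slack` line tolerance, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple

# Hypothetical record types for illustration; real tools report richer data.


@dataclass(frozen=True)
class InjectedBug:
    variant: str  # identifier of the buggy program variant
    line: int     # line where the bug was injected
    kind: str     # bug class, e.g. "out-of-bounds read"


# A tool warning, reduced to (variant, line, kind).
Report = Tuple[str, int, str]


def detected(bug: InjectedBug, reports: Set[Report], slack: int = 0) -> bool:
    """True if some report names this bug's kind within `slack` lines of it."""
    return any((bug.variant, bug.line + delta, bug.kind) in reports
               for delta in range(-slack, slack + 1))


def recall(benchmark: List[InjectedBug], reports: Iterable[Report],
           slack: int = 0) -> float:
    """Fraction of injected bugs that the tool reported."""
    if not benchmark:
        raise ValueError("benchmark must contain at least one injected bug")
    seen = set(reports)
    return sum(detected(bug, seen, slack) for bug in benchmark) / len(benchmark)


# Made-up sample data: four injected bugs and the warnings of two tools.
benchmark = [
    InjectedBug("v1", 12, "out-of-bounds read"),
    InjectedBug("v2", 30, "null dereference"),
    InjectedBug("v3", 7, "out-of-bounds read"),
    InjectedBug("v4", 55, "null dereference"),
]
tool_a = [("v1", 12, "out-of-bounds read"), ("v2", 30, "null dereference"),
          ("v3", 7, "out-of-bounds read"), ("v3", 90, "null dereference")]
tool_b = [("v4", 56, "null dereference")]

for name, reports in [("tool A", tool_a), ("tool B", tool_b)]:
    print(name, recall(benchmark, reports), recall(benchmark, reports, slack=1))
```

The sketch deliberately measures only recall on injected bugs. A warning that matches no injected bug is not counted as a false positive, because each variant is only known to contain at least one bug and the host program may have others.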

More information about this work is available in our paper, linked above. In addition, we have published a white paper: “Bug Injector: Generation of Cyber-defense Evaluation Benchmarks.”

Interested in learning more?

Read our white paper “Bug Injector: Generation of Cyber-defense Evaluation Benchmarks.”

Bug-Injector research was sponsored by the Defense Advanced Research Projects Agency (DARPA) under Contract No. D17PC00096 and the Department of Homeland Security (DHS) Science and Technology Directorate, Cyber Security Division (DHS S&T/CSD) via interagency agreements HSHQDC-16-X-00076 and 70RSAT18KPM000161 with the Department of Health and Human Services (HHS) resulting in contract No. HHSP233201600062C. The views, opinions, findings, and conclusions or recommendations contained herein are those of the authors and should not be interpreted as necessarily representing the official views, policies, or endorsements, either expressed or implied, of DARPA or DHS.